Please note: this is the first, much too complicated way I tried (and succeeded) to get the MacBook Air SuperDrive to work with my MacBook Pro. In the meantime, I have found a much better, safer and easier way to do it. I have kept this description here for those interested in the technical details of searching for, and eventually finding, a solution. If you just want to make your MacBook Air SuperDrive work, please see this post, and don't confuse yourself with the techy details below.

Warning: this is a hack, and it's not for the faint of heart. If you do any of what I describe below, you are doing it entirely at your own risk. If the description below does not make at least a bit of sense to you, I would not recommend trying the recipe at the end.

The story is this: a while ago I replaced the built-in optical disk drive in my MacBook Pro 17" with an OptiBay (in the meantime, there are also alternatives), which allows connecting a second hard drive or, in my case, an SSD.

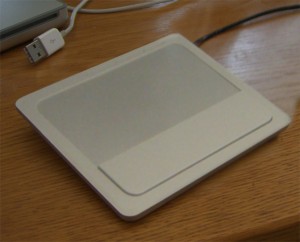

To be able to keep using the SuperDrive (Apple's name for its CD/DVD read/write drive), the OptiBay came with an external USB case, which worked fine but was ugly. I didn't want to carry that around, so I left it at home and bought a shiny new MacBook Air SuperDrive for the office.

It just didn't occur to me that this thing might not work with just any Mac, so I didn't even ask before buying. I knew that many third-party USB optical drives work fine, so I assumed the same would be true for the Apple drive. But I had to learn otherwise: this drive only works with Macs that, in their original form, do not have an optical drive, namely the MacBook Airs and the new Minis.

But why doesn't it work? Searching the net, among a lot of inaccurate speculation, I found a very informative blog post from 2008 that pretty much explains everything and even provides a hardware solution: replacing the Apple-specific USB-to-IDE bridge within the drive with a standard part.

However, I felt challenged to find a software solution. I could not believe that there was a technical reason for not using that drive as-is with any Mac.

There are a lot of good reasons for Apple not to allow it, first and foremost avoiding the complexity of possibly multiple CD/DVD drives, which would confuse users and create support cases.

So I thought it must be the driver intentionally blocking it, and a quick look at the console revealed that this is in fact the case, undisguised:

2011/10/27 5:32:37.000 PM kernel: The MacBook Air SuperDrive is not supported on this Mac.

Apparently the driver knows that drive, and refuses to handle it if it runs on the "wrong" Mac. From there, it was not too much work. The actual driver for Optical Disk Drives (ODD) is /System/Library/Extensions/AppleStorageDrivers.kext/Contents/PlugIns/AppleUSBODD.kext
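
If you just want to confirm that the message really lives in this binary before reaching for a disassembler, a plain text search is enough. This is a minimal sketch, not what I did (I used IDA's string list); `find_string` is a hypothetical helper name, and it assumes GNU grep, whose `-b` reports the offset of each match itself when combined with `-o`. The number it prints is a file offset (what a hex editor shows), not the address IDA displays. Since the driver contains both 32-bit and 64-bit code, you will likely see one hit per architecture, and the exact wording stored in the binary may differ from the logged line if it is assembled from a format string.

```shell
# Hypothetical helper: print the decimal file offset of every occurrence
# of a literal text string inside a (binary) file.
# Assumes GNU grep: -a treats binary data as text, -F disables regex
# interpretation, and -o together with -b prints each match's own offset.
find_string() {
  LC_ALL=C grep -a -o -b -F -- "$2" "$1" | cut -d: -f1
}

# Usage (run from the PlugIns directory):
# find_string AppleUSBODD.kext/Contents/MacOS/AppleUSBODD "is not supported on this Mac"
```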

I fed it to the IDA evaluation version, searched the strings for the message from the console, and found where that text is used. Once I saw the code nearby, it was very clear how it works: the driver detects the MBA SuperDrive, and then checks whether it is running on an MBA or a Mini. If not, it prints that message and exits. It's one conditional jump that must be made unconditional (to tell the driver: no matter what Mac, just use the drive!). In i386 machine code this means replacing a single 0x75 byte (JNE, a short conditional jump) with 0xEB (JMP, a short unconditional jump). IDA could tell me which byte this was in the 32-bit version of the binary, and the nice 0xED hex editor allowed me to patch it.

Only, most modern Macs run in 64-bit mode, and the evaluation version of IDA cannot disassemble 64-bit code. So I had to search the hex dump of the driver for the same code sequence (similar, but not identical hex codes) in the 64-bit part of the driver. Luckily, that driver is not huge, and there was a pretty unique byte sequence that identified the location in both the 32-bit and the 64-bit code. So the other byte location to patch was found. I patched both bytes to 0xEB in 0xED, and the MacBook Air SuperDrive was working instantly with my MBP!
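
The search through the hex dump for a known byte sequence can also be scripted. Below is a rough sketch under stated assumptions: `find_hex` is a hypothetical helper, and since the actual byte sequence is not given in this post, the pattern in the usage comment is only a placeholder. It dumps the file as one continuous hex string with POSIX `od`, lets GNU grep report match positions, and keeps only matches that start on a byte boundary (an even position in the hex string). Both 0x75 and 0xEB are two-byte short jumps (opcode followed by an 8-bit displacement), which is why swapping a single byte is enough: the displacement byte after it stays valid.

```shell
# Hypothetical helper: print the file offset (in hex) of every occurrence
# of a byte pattern, given as lowercase hex digits without spaces.
find_hex() {
  # od -An -v -tx1: plain hex bytes, no address column, no '*' folding.
  od -An -v -tx1 "$1" | tr -d ' \n' |
    LC_ALL=C grep -o -b -- "$2" |
    while IFS=: read -r pos _; do
      # A hit at an odd position straddles two bytes, so skip it.
      # (grep -o does not report overlapping hits, so an aligned hit that
      # overlaps an unaligned one can be missed.)
      if [ $((pos % 2)) -eq 0 ]; then
        printf '0x%X\n' $((pos / 2))
      fi
    done
}

# Usage (placeholder pattern, not the real sequence):
# find_hex AppleUSBODD.kext/Contents/MacOS/AppleUSBODD "<hex bytes around the jump>"
```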

Now for the recipe (again, be warned: following it is entirely at your own risk, and remember that sudo is the tool that lifts all restrictions; you can easily and completely destroy your OS installation and data with it):

- Make sure you have Mac OS X 10.7.2 (Lion), Build 11C74. The patch locations are highly specific to a build of the driver; it is very unlikely that they will work without modification in any other version of Mac OS X.

- Get 0xED or any other hex editor of your choice

- Open a terminal

- Go to the location where all the storage kexts are (which is within an umbrella kext called AppleStorageDrivers.kext)

cd /System/Library/Extensions/AppleStorageDrivers.kext/Contents/PlugIns

- Make a copy of your original AppleUSBODD.kext (to the desktop for now, and store it in a safe place later; in case something goes wrong, you can copy it back!)

sudo cp -R AppleUSBODD.kext ~/Desktop

- Make the binary file writable so you can patch it:

sudo chmod 666 AppleUSBODD.kext/Contents/MacOS/AppleUSBODD

- Use the hex editor to open the file AppleUSBODD.kext/Contents/MacOS/AppleUSBODD

- Patch:

at file offset 0x1CF8, convert 0x75 into 0xEB

at file offset 0xBB25, convert 0x75 into 0xEB

(If you find something other than 0x75 at these locations, you probably have another version of Mac OS X or of the driver. If so, don't patch, or you are asking for serious trouble.)

- Save the patched file

- Remove the code signature. I was very surprised that nothing more is needed to make the patched kext load:

sudo rm -R AppleUSBODD.kext/Contents/_CodeSignature

- Restore the permissions, and make sure the owner is root:wheel, in case your hex editor has modified it.

sudo chmod 644 AppleUSBODD.kext/Contents/MacOS/AppleUSBODD

sudo chown root:wheel AppleUSBODD.kext/Contents/MacOS/AppleUSBODD

- Make a copy of that patched driver and keep it in a safe place. If a system update overwrites the driver with a new, unpatched build, chances are high that you can just copy this patched version back to make the external SuperDrive work again.

- Plug in the drive and enjoy! (If it does not work right away, restart the machine once).
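
The Patch step above, including its "check first, or don't patch" rule, can also be scripted instead of done by hand in a hex editor. This is only a sketch, not something I used: `patch_byte` and `read_byte` are hypothetical helper names, and the script relies on standard `od`, `printf` and `dd` (with `conv=notrunc`, so the rest of the file is left untouched). It refuses to write unless it finds the expected byte, and it re-reads the byte afterwards. The offsets in the usage comment are the ones from the recipe and, like the recipe, only apply to the 10.7.2 (11C74) driver build.

```shell
# Print the byte at decimal offset $2 of file $1 as two lowercase hex digits.
read_byte() {
  od -An -v -tx1 -j "$2" -N 1 "$1" | tr -d ' \n'
}

# patch_byte FILE OFFSET EXPECTED NEW
# OFFSET may be decimal or 0x-prefixed hex; EXPECTED and NEW are two
# lowercase hex digits (e.g. 75 and eb). Writes nothing and returns
# non-zero if the current byte is not EXPECTED.
patch_byte() {
  file=$1
  off=$(($2))   # shell arithmetic converts 0x... to decimal
  actual=$(read_byte "$file" "$off")
  if [ "$actual" != "$3" ]; then
    echo "offset $2: found '$actual', expected '$3'; not patching" >&2
    return 1
  fi
  # Emit the new byte through a printf octal escape, then overwrite exactly
  # one byte at the offset; conv=notrunc keeps the rest of the file intact.
  printf "\\$(printf '%03o' "$((0x$4))")" |
    dd of="$file" bs=1 seek="$off" count=1 conv=notrunc 2>/dev/null
  # Re-read to confirm the write landed.
  [ "$(read_byte "$file" "$off")" = "$4" ]
}

# Usage (from the PlugIns directory, after the backup and chmod steps):
# BIN=AppleUSBODD.kext/Contents/MacOS/AppleUSBODD
# patch_byte "$BIN" 0x1CF8 75 eb && patch_byte "$BIN" 0xBB25 75 eb
```

Checking both bytes before touching either one would be stricter still; with `&&` as above, a mismatch at the second offset leaves the first one already patched, so keep the backup copy at hand.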

PS: Don't ask me for a download of the patched version. That's Apple's code; the only way is DIY!